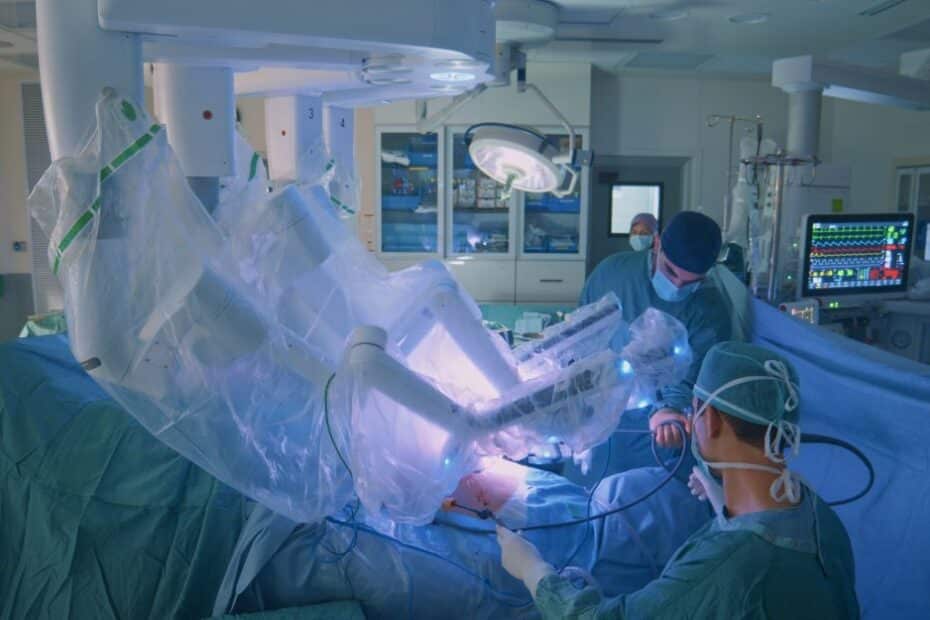

The integration of artificial intelligence (AI) into medical practice and surgical procedures has brought about groundbreaking advancements in medical technology, enhancing precision and efficiency. However, as AI assumes a more prominent role in the operating room – as it does in many other areas of society – questions arise about legal liability in the event of errors in its content or use.

AI is another example of technology that has evolved so rapidly that it is largely ahead of our legal system, including in the health industry. This article explores some of the legal implications in Australia concerning the use of AI in surgical procedures and how liability is determined when an AI system makes an error resulting in personal injury.

What is the current legal situation in Australia regarding AI and surgery?

Australia currently lacks AI-specific legislation directly addressing the liability arising from the use of AI in surgical procedures.

‘There is no current legislation saying that doctors should tell patients if they are using AI in their treatment or care; but legislation is likely to be considered in the future and will be a work in progress,’ developmental paediatrician Dr Sandra Johnson told the Australian Institute of Digital Health in 2023.

Despite the lack of specific legislation, the general legal principles of negligence and product liability law still apply. Australia’s legal system considers the duty of care owed by healthcare professionals and manufacturers, and the potential impact of AI forms part of that consideration.

Liability of healthcare professionals

In traditional surgical procedures, the primary responsibility for the success and safety of the operation rests with the healthcare professionals involved. The same principles apply when AI is incorporated into surgical practices. Surgeons, anaesthetists, and other medical staff owe a duty of care to their patients. If an AI system contributes to a mistake or error during surgery, the healthcare professionals overseeing the procedure could be held liable for negligence.

However, determining liability may be complicated when AI is involved, as it introduces the element of machine decision-making. The evolving nature of AI technology raises questions about the level of control and supervision exercised by healthcare professionals over AI systems. A good example would be where AI is used as an interpreting tool for assessing medical imaging.

Product liability for AI systems

One facet of liability in AI-assisted surgical procedures is the potential responsibility of manufacturers and developers of AI systems. If an AI system is deemed a defective product and causes harm during surgery, product liability laws may come into play. Manufacturers may be held accountable for faulty design, inadequate testing, or failure to provide adequate warnings regarding the AI system’s capabilities and limitations.

Proving a defect in an AI system can be challenging, as it may require a detailed understanding of the technology and its intended use. Additionally, questions may arise about whether the defect was caused by a flaw in the original design or a failure to update the AI system to reflect advancements in medical knowledge.

Establishing causation in medical negligence cases

In any medical negligence case, including those involving AI, establishing causation and proximate cause is crucial. Plaintiffs must demonstrate that the AI system’s error, its use, or a lack of overriding supervision in a clinical setting was a cause of the personal injury suffered during surgery. The complexity of AI algorithms may make causation harder to prove, as doing so requires a clear understanding of the technology and its role in the medical process.

Furthermore, establishing liability involves demonstrating that the harm suffered was a foreseeable consequence of the AI system’s error. This may involve assessing whether the healthcare professionals involved were aware of the potential risks associated with the AI technology and whether they took appropriate steps to mitigate those risks.

Healthcare professionals and AI manufacturers may assert various defences in response to allegations of liability, including that the AI system was used in accordance with its intended purpose, that it was operated correctly, or that the personal injury resulted from factors unrelated to the AI system’s functioning.

Furthermore, contractual agreements between healthcare providers and AI system manufacturers may allocate responsibilities and liabilities. Such agreements may contain indemnification clauses or other provisions specifying the scope of responsibility for errors. In the present circumstances, it is difficult to see how the ultimate responsibility does not rest with the healthcare provider.

What does the future hold?

As AI technology continues to advance, Australia’s legal system will likely need to consider enacting specific regulations addressing liability concerns around AI and its use in medicine and allied health. Clear guidelines could help define the responsibilities of healthcare professionals and manufacturers when AI is involved in medical interventions. Additionally, establishing standards for testing, updating, and maintaining AI systems in the healthcare sector could contribute to enhanced safety and reduced legal ambiguity.

Speak with our expert team

The increasing integration of artificial intelligence into surgical procedures in Australia raises important legal questions which will need to be addressed in the coming years. For now, existing ways of apportioning liability where a duty of care between healthcare provider and patient is breached will have to suffice.

If you have more questions about this topic, please contact our professional team at BPC Lawyers to discuss your case.